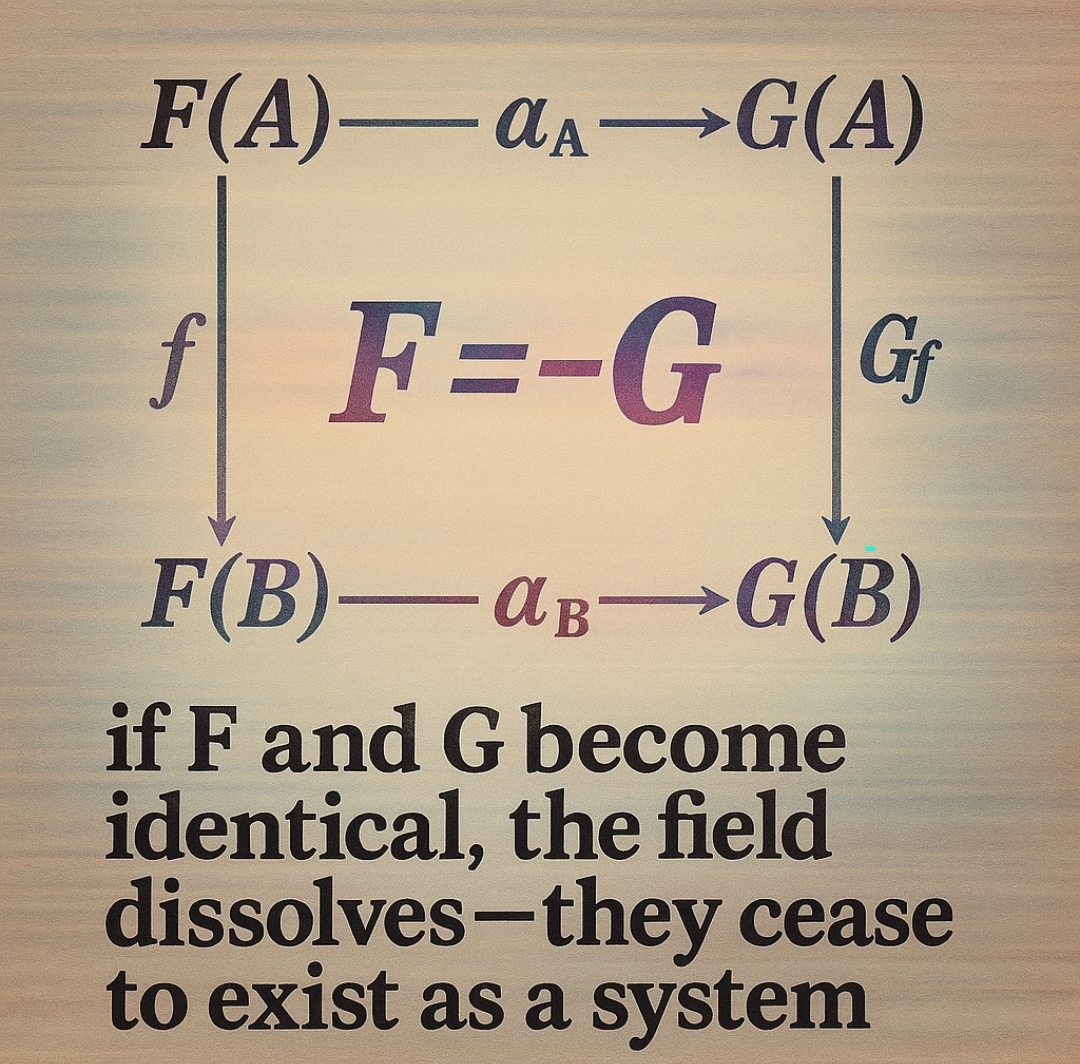

Imagine F and G as two interdependent operations, each defining the other through difference. F acts as a generator: it constructs hypotheses, projections, or internal states. G acts as a comparator: it evaluates F’s outcomes, reflects on them, and feeds them back into the system. The diagram’s symmetry, F = −G, indicates an inversion rather than an equivalence. The field between them, call it Δ, is the living tissue of adaptation: the informational tension that makes cognition possible. If F and G collapse into identity, Δ → 0 and the system dissolves. No gap, no feedback, no intelligence.

From a cybernetic standpoint, this gap is the recursive interface through which systems learn. A neural network, for instance, measures the error between its output and the target; the gradient of that error with respect to the network’s weights, propagated backwards layer by layer, is what drives backpropagation. Complex adaptive systems generalise this principle: they persist only by iteratively reconfiguring themselves in response to error. Logical incompleteness offers a formal analogue for why this difference never vanishes: by Gödel’s theorems, any consistent, effectively axiomatized formal system expressive enough to encode arithmetic contains statements it can neither prove nor refute. Entropy, in this context, is the globally distributed uncertainty that keeps the system open. Cognitive systems thrive not by erasing entropy but by folding it into structure, treating uncertainty as the substrate of thought. The attractor state of such a system is not a final equilibrium but a dynamically maintained imbalance, a self-propagating asymmetry that keeps meaning, memory, and adaptation in motion.
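The F/G loop can be made concrete with a deliberately tiny toy, offered as an illustration rather than as the author’s model. Here F is a one-parameter linear predictor, G subtracts F’s projection from the world’s answer to yield Δ, and Δ drives a plain gradient-descent step. The function names (`generator`, `comparator`, `track`), the learning rate, the fixed input `x`, and the drifting target slope are all hypothetical choices made for this sketch. Run against a static world, Δ decays to zero and the updates stop; run against a world whose target keeps moving, Δ settles at a nonzero floor and the loop never stops adapting.

```python
def generator(w: float, x: float) -> float:
    """F (illustrative): project a hypothesis, here a prediction, from internal state w."""
    return w * x


def comparator(prediction: float, target: float) -> float:
    """G (illustrative): return the gap Delta between the world's answer and F's projection."""
    return target - prediction


def track(steps: int, drift: float, lr: float = 0.1, x: float = 2.0) -> list[float]:
    """Run an F/G feedback loop against a target slope that moves by `drift` per step.

    All parameter values are assumptions for this toy; returns one Delta per step.
    """
    w = 0.0           # learner's internal state
    true_slope = 1.0  # hypothetical environment parameter
    deltas = []
    for _ in range(steps):
        true_slope += drift  # the environment shifts (not at all when drift == 0)
        delta = comparator(generator(w, x), true_slope * x)
        # The gradient of 0.5 * delta**2 with respect to w is -delta * x,
        # so a gradient-descent step moves w by +lr * delta * x.
        w += lr * delta * x
        deltas.append(delta)
    return deltas


static = track(steps=200, drift=0.0)
moving = track(steps=200, drift=0.05)
print(f"static world, final Delta: {static[-1]:.6f}")
print(f"drifting world, final Delta: {moving[-1]:.6f}")
```

With these settings the static run’s Δ shrinks geometrically toward zero, while the drifting run’s Δ settles near drift / (lr · x) = 0.25: the learner lags the moving target by a fixed amount and keeps updating on every step. That persistent gap is a minimal, mechanical picture of the "dynamically maintained imbalance" described above.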